Andrew Snyder-Beattie is Director of Research at the Future of Humanity Institute, a multidisciplinary research institute at the University of Oxford that aims to bring its research “to bear on big-picture questions about humanity and its prospects.” The following is an excerpt from one of multiple conversations between artist Cécile B. Evans and Snyder-Beattie (in 2015) about who gets to be a part of humanity’s future—or if there even will be one. All views expressed are explicitly Snyder-Beattie’s own.

Cécile B. Evans: I was watching philosopher Nick Bostrom, the Director of the Future of Humanity Institute, presenting the department at Oxford in 2010. He started his lecture with Pieter Bruegel’s painting of Icarus flying too close to the sun. The gods tell Icarus, “If you fly too close to the sun, your wings will melt and you’ll fall back to Earth and die.” Bostrom breaks down three different interpretations of the painting itself. The hubris interpretation: people are too confident. The indifference interpretation: people are selfish assholes—you can tell this by the workers in the painting who pay no mind to Icarus’ demise. And the obliviousness interpretation: people are completely ignorant to larger factors at play—here the gods and demigods—and continue to toil away. Any one of these three positions could be argued correctly or incorrectly. At what point do you know which interpretation to choose and act upon? What happens after you’ve made that choice?

Andrew Snyder-Beattie: Wow, OK.

CBE: We’ve spoken about this before. I understand scientifically why a neurologist has to make certain choices and act upon them—a person’s life is at stake. But as a plebe ingesting a constant influx of choices and positions, I often feel an impossibility of choice. Whereas you confidently say it’s important, for yourself, to make one choice and continue, as otherwise nothing would ever get done.

ASB: Well, I don’t know about “confidently.” Something came into my head when you were telling the story about Icarus: the Future of Humanity Institute sometimes gets a lot of flak for being “against technology” or is basically accused of being Luddite. And I find that amusing because there’s a strong transhumanist undercurrent in a lot of our thought. There are intuitions saying there’s a natural order of things, and that humanity shouldn’t disrupt this natural order. Icarus in some ways embodies this. He tries to do something ambitious and gets burned for it. I would look at this problem, pick it apart, and ask, “What are the possible outcomes, and how good or bad are those outcomes?” And “good” or “bad” probably shouldn’t rely just on what nature has given us. This is a long way of saying that there are certain technologies—like anti-aging technology—which people use as examples for bringing up the hubris interpretation by saying it’s ridiculous for people to be working to cure death; it’s not natural. That this is the peak of hubris.

CBE: Right.

ASB: I’m inclined to say: Throw a bunch of thought experiments at it. Is this something that you’re really willing to accept? Nick Bostrom, Director of the Future of Humanity Institute, and Research Fellow Toby Ord use a classic reversal test: Imagine the status quo as the opposite. Let’s imagine we live in a world in which every generation lives five years longer than the previous generation. But unfortunately, that’s not the natural order of things. If we implemented a euthanasia plan, we could save one trillion dollars, each generation. Would you just start killing people at the end of their eighty years in order to save this money? No one would do that! It would be considered totally inhumane.

And then, in contrast, let’s say we invest a trillion dollars in anti-aging research. We could then achieve five years of extra life in each generation. People would suddenly say that’s ridiculous. So it seems odd to have different answers to these two different thought experiments. It seems like we should be in a similar situation in response to these two ideas.

CBE: The possibility of a double bind seems totally absurd in this case. What’s the possibility that this fear of hubris could hold back major biological and technological advancements? I find it interesting that there is a heavy transhumanist strain of thinking not only in your department but throughout existential risk management. If we acknowledge that there were Neanderthals, and the Neanderthals died off because they were unable to cope in the same way as we Homo sapiens were, then at what point do post-humans become “us” and we become the Neanderthals? And where does that put you, as someone who is defending humanity? Do you continue to save “humanity,” or do you accept that it has transformed into something else?

ASB: I think that if humanity transformed into something else, that’s not necessarily something I would object to, depending on what that transformation is. When one says to “defend humanity,” it is important to understand what are the fundamental values that we ascribe to life. What makes life worth living? We think that humanity has value, and we think that human lives have value. We also think that animals have value, right? We think it would be morally reprehensible to torture animals. So “value” isn’t just limited to Homo sapiens. It extends to less advanced life forms, and presumably more advanced life forms as well.

CBE: You’re suggesting cohabitation?

ASB: Yes, that’s right.

CBE: I would argue that animals’ quality of life and their happiness has decreased globally. In your personal line of thinking, how much room is there for things like happiness or sorrow in terms of the survival of humanity?

ASB: Ideally, that’s what it should all be about. The caveat is obviously that I would like humanity to survive in a happy state. I think that cohabitation is certainly physically possible. Animals are not necessarily suffering because we exist. It just so happens that we have economic and political structures that allow for factory farming, all sorts of environmental degradation, and other things that are really bad for animal welfare. I don’t think these things are a necessity of human life. I think there could be alternate arrangements in which animals benefit from the existence of humanity. One of the more controversial debates at the moment is the question of whether humans should intervene in the suffering of wild animals.

CBE: Right.

ASB: The idea that we should genetically engineer wild animals so they don’t prey on one another is obviously very theoretical and could be really bad for the ecosystem, so no one is seriously proposing this. But as a thought experiment, it’s good in terms of separating out our intuitions about what’s valuable about nature and what’s valuable about the components within an ecosystem.

CBE: This question was being asked around six or seven years ago: Should we let pandas die out? When pandas are depressed, they eat more, and both of these things contribute to a decrease in libido, and then they don’t want to procreate. I just think it’s fucked up for us to make that decision for the pandas: to what extent are they depressed because they live on a planet where we are at the top of that decision-making hierarchy? How much do you factor in something like power imbalance?

ASB: As far as our relationship to artificial intelligence is concerned, presumably we’re going to be the ones deciding and creating this intelligence. We humans would have some agency over our successors, hopefully. It is a different situation from the pandas—where they experience us as an external force coming in—that would be more similar to aliens invading and deciding what to do with us.

CBE: Yeah. Who gets to decide?

ASB: Some people have this view that human life is actually not worth living and that to exist is to be in a state of suffering. But what philosopher Derek Parfit points out is that even in a world where we think that human existence is “net bad,” we should still try to prevent existential risks—simply because there is a possibility of life having positive value in the future. So there’s this option that we could create something that experiences really amazing well-being in the future. Maintaining that is actually worth quite a bit. That’s another reason to prevent human extinction. I agree with this. I imagine that in the future there could be beings experiencing radically more well-being than we do in our current state. And this might be something to work for.

CBE: I would agree with you—but from the standpoint that I want to be one of those advanced beings. You’ve said that humans are bad at accounting for the welfare of future generations. But you seem to have this perspective of trying to account not just for yourself but whatever is to come. Within the Institute you aim to think about humanity 20,000 years from now, instead of twenty or fifty or one hundred years in the future. I’m still thinking from a really selfish perspective! There’s a Google superintelligence that was trotted out recently, and it was asked “What is the meaning of life?” And it answered: “To live forever.” This is an answer that I can get down with—live to keep living.

ASB: Nick wrote an article called “Astronomical Waste: The Opportunity Cost of Delayed Technological Development.” I really love this paper, because at first he’s arguing that the faster technology progresses, the bigger the benefits, much bigger than the typical person realizes. If you have this view that adding more lives that have high levels of well-being is good, then each second of delay in space colonization means a huge potential loss. But then Nick argues that slowing technological progress down by ten million years, if it increases the chance of eventual space colonization by 1 percent, is probably worth it from this perspective. We think technology is really, really good for humanity, but it has all these potential pitfalls that could be existential risks. This means that there’s a tension between people that want to have the benefits of those technologies as soon as possible and those that think that future generations ought to take priority.

CBE: That goes against accelerationist theories that encourage expansion and Moore’s law (a computing theory that the number of transistors able to fit on a microchip would double every two years). Well, it acknowledges Moore’s law but says that there are benefits to slowing it down.

ASB: Maybe. Things get complicated. Things seem to be getting better over time. Violence is declining. There are more human rights around the world, feminism, literacy—we’ve made a lot of progress. It could be that some of this progress is tied to technological advances. The claim is that if slowing down technology makes things safer, then maybe it’s good to do that. It’s unclear whether or not that’s true. It could be that slowing down technology, in terms of what its real-world implications would be, would be bad, especially when you consider how technologies interact. Certain technologies you want to accelerate, because they actually mitigate the risks of other technologies. Getting an asteroid deflection program is just good. We want that as fast as possible. Getting space colonization is something we want as fast as possible. Getting vaccines is something we want as fast as possible. So there are certainly technologies that would be good not only for us but for the benefit of future generations as well. So accelerating those technologies certainly seems worthwhile. From a moral perspective, there is this tension between being selfish and wanting all these amazing medical technologies now, and the kind of risks that really radical future technologies might hold if we go too fast without thinking about it.

CBE: Earlier we were talking about the 2015 movie The Martian, and you proposed an idea which is very much at the center of the video that I’m working on: The utilitarian as protagonist. We first talked about this in reference to the character of Romilly in the 2014 movie Interstellar and you said “I don’t know why the utilitarian always gets a bad rap.” He gets killed off. But you like The Martian, because it goes the opposite route. They risk the lives of four people to save one. Because of the advances that he made whilst on Mars, if saving him then helps us to save all of humanity when we have to colonize Mars, then we benefit from his information. So maybe inventor and futurist Raymond Kurzweil’s, or my, or whomever’s hubris or trying to fly too close to the sun actually provides a level of information. So if a bystander is paying attention, they might see, “Oh god, Icarus’ wings started melting when he was at XYZ proximity to the sun. But until that point he was actually doing alright, so maybe we can try that for a little bit.” Matt Damon did tape everything . . .

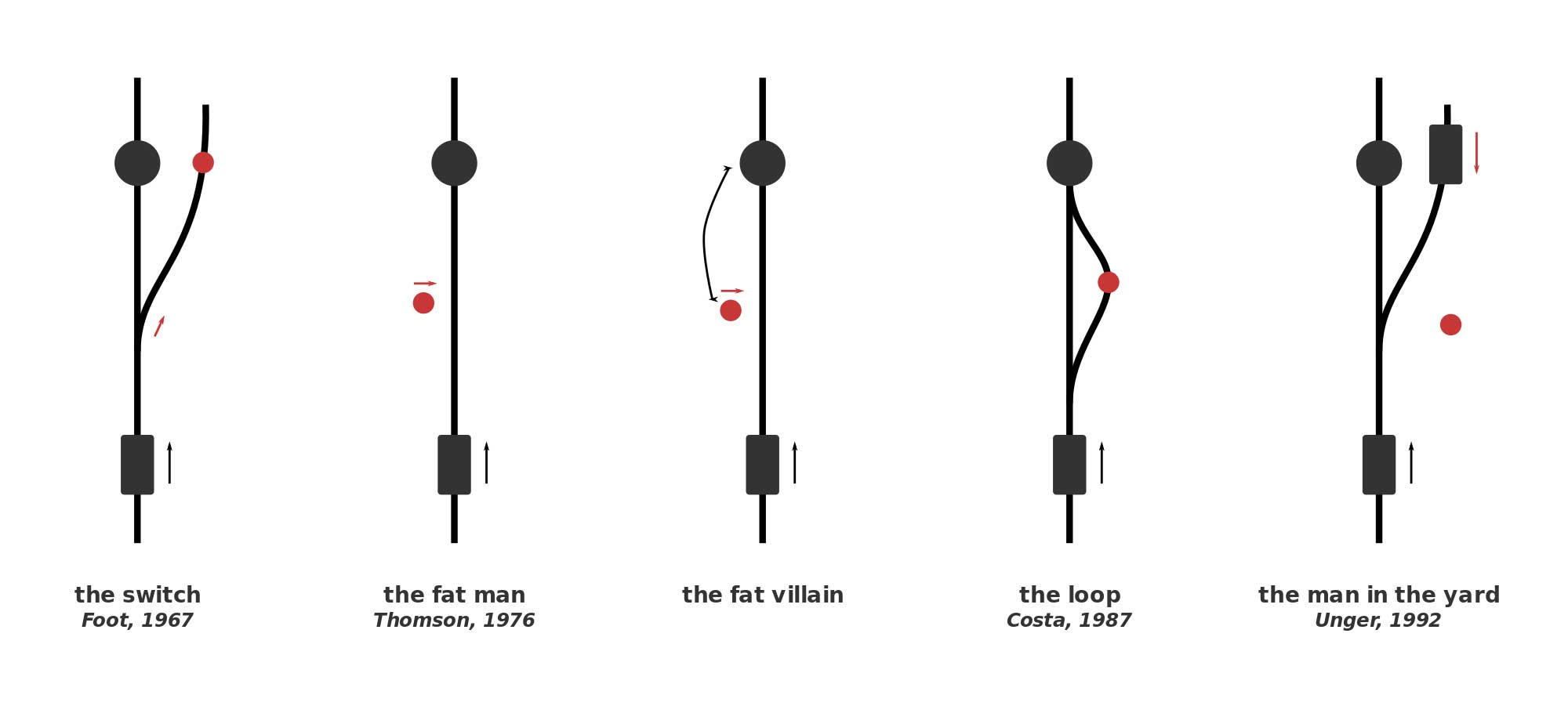

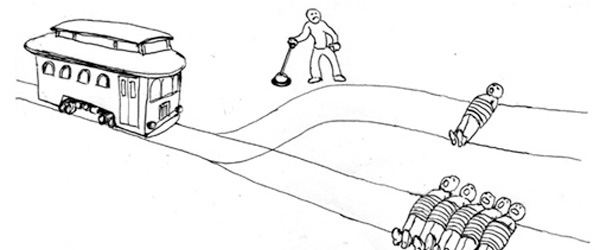

ASB: I really like that. I guess the thing about utilitarianism is that it’s difficult to work out in practice. Acting in a way that is traditionally thought of as being “ethical” is usually the right call. There are all these examples within the “trolley problem” thought experiment, in which there’s a runaway train, and if you push a fat person onto the tracks to stop it, five lives will be saved. Do you push the fat person onto the tracks? In this thought experiment, there are no consequences. It’s an isolated box. But in the real world, living in a society where it’s OK to push a person onto the tracks is not going to lead to a good outcome.

CBE: It alters the value system and punctuates the equation of who should be valued and who shouldn’t. I was so happy that you turned me on to the trolley problem. I went down a little bit of a hole and also discovered “the fat villain.” The idea that the big person is also the person who tied the five people to the tracks. Is it ethically OK then to push that person onto the track to save them?

ASB: Right. Most people think that it is OK.

CBE: What do you think?

ASB: I think it’s OK in both scenarios.

CBE: Really??? To push the person onto the tracks???

ASB: In the super isolated, super sterile environment of the thought experiment, yes, I think that would be the right thing to do. That being said, I wouldn’t actually do that in real life.

CBE: What you’re coming up against is that in the sterile environment of an imagined scenario, the right answer is to do that. But with all the messiness of reality . . . it’s a setup for failure. It’s a conclusion I’m applying to large corporations or hyper systems like government or whatever might emerge out of the combination of these two. They would consistently be acting under this umbrella, the auspices of a greater good, an imagined, perfect bubble scenario. The reality of such decision-making would cause an avalanche of problems and scenarios impossible to overcome on a human level.

ASB: Did we talk about scope insensitivity the last time we talked?

CBE: No.

ASB: OK. So one argument is that we should do the utilitarian thing, because utilitarianism is correct. There is another argument that says even if utilitarianism is correct, acting in a utilitarian way does not really get you there, so the best thing to do in practice is still just be a good person—do not push people onto the train tracks and do the thing that is obviously good. However, an argument that cuts back against this is that doing what’s obviously good is not something that humans have evolved to do. This becomes most clear when we talk about scope insensitivity. A number of psychologists did interviews with people and asked, “How much would you be willing to pay to save 2,000 birds from an oil spill?” The average answer was roughly $80. They asked another big group of people, “How much would you pay to save 200,000 birds from an oil spill?” The average answer was about $80.

Humans have not evolved to think about big numbers. When we were hunter-gatherers, no one had the ability to influence large numbers of people. That’s starting to change, as far as the large numbers of people that could be living in the future, but also in terms of the large numbers of people living in the present. So when we are making policies that affect thousands or millions of people’s lives, I think there might be more room for the sort of utilitarian calculus that might help us figure out how we make the world a better place.

CBE: You had described the idea of intergenerational problems vs. personal problems. How much of a threat is individualism to humanity?

ASB: Yeah, pretty big maybe. Especially as technology is getting more and more powerful. I guess it depends on what you mean when you say individualism . . .

CBE: In the popular, media sense, that each individual is unique and special. In the sense that every generation since the 1980s has been called the “me generation,” and also individualism as a concept, like humanism.

ASB: I think it doesn’t call for much charity, and that’s too bad. I don’t know, maybe it encourages people to be more creative and take bigger risks as far as pursuing what they think is a meaningful life.

CBE: Taking your birds/oil spill analogy, I’ll go ahead and make an easy link between individualism and capitalism here. What is the probability that capitalism could at some point eclipse other major existential risks, like climate change or artificial intelligence? How much of an existential risk is capitalism?

ASB: It certainly doesn’t seem to be an ideal system, that’s for sure. Capitalism is an existential risk insofar as it leads to something that would be permanently bad. It could be directly leading to an AI catastrophe or climate change, something that actually leads to human extinction—if it were to somehow become a permanent system on Earth. I guess I’m more concerned about the former rather than the latter. It’s much better to tackle the individual issues rather than try and change the global world order. It would be great if we could come up with a replacement for capitalism, but it has its costs and its benefits. It’s unclear what would happen after that.

CBE: There’s also the question of whether whatever system replaces capitalism—one that we’ll transition to because of capitalism’s failure—will have a better outcome, be any more or less successful?

ASB: It seems very unlikely that any global system is permanent. Capitalism has honestly only been around for a few hundred years. We have experimented with lots of different things. We have lived in hunter-gatherer bands. We have lived in empires. We have lived in all sorts of systems. If we really zoom out, it seems very unlikely that what we have stumbled upon in the past century or so is going to somehow be the permanent state of affairs for the next 20,000 to 30,000 years. Right? Capitalism is one of these things that is a problem, but a problem I want future generations to worry about. I want to deal with the problems which determine whether or not future generations will be there in the first place.

CBE: Do you think the time we are living in is unique?

ASB: I think it’s incredibly unique.

CBE: Why?

ASB: We discovered the atomic bomb seventy years ago. That marks a unique time in history. We are one of the first generations living in a world in which we can wipe ourselves out. Within the next few hundred years I imagine there’s going to be another technology that could radically change the human condition: technologies that could potentially change the human body, that could change what society looks like. We have the potential for other technologies which could be extremely dangerous and could also lead to these sorts of existential risks. If we survive this transition period—of getting these technologies and learning how to handle them—then there’s potential for a really bright and long-lasting future. I’m not the only one who thinks that this is potentially the make-or-break century, and that we are living in a very special time. This increases the urgency for us to work on this. This isn’t going to be an opportunity that future generations get, whether or not the problems are resolved or still exist.

CÉCILE B. EVANS is a Belgian American artist based in London. She is the 2012 recipient of the Emdash Award (now Frieze Artist Award) and the 2013 recipient of the PushYourArt Prize, resulting in the commission of a new video work for the Palais de Tokyo, Paris. She is the creator of AGNES, the first digital commission for the Serpentine Galleries (curated by Ben Vickers), a project which has grown internationally across platforms.

Upcoming solo exhibitions include Kunsthalle Winterthur, CH (2016), De Hallen Amsterdam, NL (2016), and the Kunsthal Aarhus, DK (2016). In 2016 she is participating at the 9th Berlin Biennale for Contemporary Art and the Moscow International Biennale for Young Art. Current and recent exhibitions include MOCA Cleveland, US (2016); Bielefelder Kunstverein, DE (2016, solo exhibition); 20th Biennale of Sydney, AU (2016); Kunsthalle Wien (2016). In 2014 she had a solo exhibition at Seventeen Gallery, London, and was shortlisted for the Future Generation Art Prize (PinchukArtCentre, Kiev). She is a 2015 recipient of the Andaz Award. Group exhibitions include Inhuman (2015, Fridericianum, Kassel, DE), TTTT (2014, Jerwood Visual Arts Foundation, London), La Voix Humaine (2014, Kunstverein Munich, DE), Phantom Limbs (2014, Pilar Corrias Gallery, London), and CO-WORKERS (2015, Musée d’Art Moderne, Paris). Recent screenings include the New York Film Festival (Projections), US; ICA London, V&A London, BFI London, and Hamburg Film Festival. A number of grants and residencies have supported her work, such as Wysing Arts Centre, Cambridge, UK, Alfried Krupp von Bohlen und Halbach-Stiftung, Schering Stiftung, and Arts Council England.

ANDREW SNYDER-BEATTIE is Director of Research at the Future of Humanity Institute at the University of Oxford, where he coordinates the Institute’s research activities, recruitment, and academic fundraising. While at FHI, Snyder-Beattie obtained over $2.5 million in research funding, led the FHI-Amlin industry research collaboration, and wrote editorials for The Guardian, Ars Technica, and the Bulletin of the Atomic Scientists, which have reached a total of over 500,000 readers. His personal research interests currently include ecosystem and pandemic modeling, anthropic shadow considerations, and existential risk. He holds a Master of Science in biomathematics and has done research in a wide variety of areas such as astrobiology, ecology, finance, risk assessment, and institutional economics.

IMAGES: second from above: Andrew Snyder-Beattie, courtesy of the Future of Humanity Institute, Oxford; third from above: Pieter Bruegel the Elder: Landscape with the Fall of Icarus, circa 1555–68, now seen as a good early copy of Bruegel’s original; sixth from above: courtesy of the Future of Humanity Institute, Oxford